As project managers, we are taught to manage the triple constraint of scope, schedule (or time) and …

Most Active Project Managers on Twitter

Using Scraperwiki, I analyzed the top project management tweeters for a month (March 15-April 14, 2014). I gathered tweets using the #pmot hashtag. Other hashtags such as #pm, #projectmanagement and #pmi are common but #pmot seems to be the standard for Project Management Online Tweets.

The overall stats for this 31-day period:

- 10,288 tweets in total

- 330 tweets per day on average

- 1,585 distinct Twitter screen names

The highest number of tweets, by far, came from @PMVault: 2,285 tweets, nearly 1,700 more than the second-most active account. However, @PMVault only tweets job postings, so it is useless to most PMs. I excluded it from the rest of the analysis.
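The post doesn’t show how the numbers were tallied. As a minimal sketch, assuming the scraped tweets have already been reduced to hypothetical (screen_name, date) pairs within the date range, the overall stats count every tweet, while the leaderboard skips excluded accounts such as @PMVault:

```python
from collections import Counter
from datetime import date

def summarize(tweets, start, end, exclude=frozenset(), top_n=10):
    """Summarize (screen_name, day) records already limited to [start, end].

    Totals and distinct names include every account; the leaderboard skips
    any screen names listed in `exclude`.
    """
    days = (end - start).days + 1  # inclusive range: March 15 to April 14 is 31 days
    counts = Counter(name for name, _ in tweets if name not in exclude)
    return {
        "total": len(tweets),
        "per_day": len(tweets) / days,
        "distinct_names": len({name for name, _ in tweets}),
        "top": counts.most_common(top_n),
    }

# Hypothetical sample records, not the real #pmot data.
sample = [
    ("PMVault", date(2014, 3, 15)),
    ("PMVault", date(2014, 3, 16)),
    ("alice_pm", date(2014, 3, 15)),
    ("alice_pm", date(2014, 4, 1)),
    ("bob_pm", date(2014, 4, 14)),
]
stats = summarize(sample, date(2014, 3, 15), date(2014, 4, 14), exclude={"PMVault"})
print(stats["top"])  # [('alice_pm', 2), ('bob_pm', 1)]
```

Note the inclusive date range: the function adds one to the date difference so that both March 15 and April 14 count.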

The rest of the top 10 looks like this:

[Table 1: top 10 #pmot tweeters, excluding @PMVault]

Additional information is in this table:

[Table 2: additional information]

If Your PM Works 50-60 Hours Per Week, Then They Are Doing It Wrong

Many of the PMs I talk to tell me that they are so, so busy – averaging 50-60 hours per week or more. To be provocative, I say, “You must be a really bad project manager.” My point is that if you are unable to manage your own time effectively, why should you be trusted to manage other people’s time?

To be sure, many people exaggerate the number of hours they work. Averaging 60 hours per week of work is damn hard. I suspect people include their commute in that number, and some confuse being at work with working. But I’m sure some people really do work 60 hours per week. And that means they are doing it wrong.

My main point is above – why are you so bad at managing your time? I’m positive you did not schedule yourself for 60 hours per week; you probably scheduled 40 hours (or slightly less), like everyone else on the project. So if you’re scheduled for 40 hours and work 60, your estimates are off by 50%! More shocking, your estimates of how long it takes you to do something should be better than your estimates for the other team members. So your entire schedule is likely off by more than 50%. Interestingly, the average schedule overrun is 60% (according to the Standish Group).
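The 50% figure is the extra effort divided by the planned effort. A trivial sketch of that arithmetic (the function name and the 12-week plan are ours, for illustration):

```python
def overrun_pct(planned_hours, actual_hours):
    """Percentage by which actual effort exceeds planned effort."""
    return (actual_hours - planned_hours) / planned_hours * 100

# Scheduled for 40 hours, actually worked 60.
print(overrun_pct(40, 60))  # 50.0

# If every task were underestimated the same way, a hypothetical 12-week plan
# would stretch to 12 * 1.5 weeks.
planned_weeks = 12
print(planned_weeks * (1 + overrun_pct(40, 60) / 100))  # 18.0
```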

Assuming your PM is working 60 hours per week, I am concerned that they are either doing menial tasks or the quality of their work is low. Research shows that the quality of work goes down the more hours are worked. This is common sense – we make more mistakes at 9pm than we do at 9am. Now, if a PM is doing menial tasks (you know what I’m talking about – PowerPoint slides, tweaking Microsoft Project just one more time, etc.), the decrease in quality may not occur or may not be noticeable. But that is also concerning. As a PM, I work on the hard things – the risks and issues that require concentrated effort and innovative thinking. I can’t be effective if I’m doing this at midnight – my solutions will not be elegant or functional. If your PM can consistently work 60 hours per week with no noticeable decrease in quality, then they aren’t focused on the right things.

In a typical year, I work unplanned overtime only a few times. (Note the word unplanned: because some IT project work must be done on weekends, I plan to work several weekends per year.) If I see a PM working a large number of hours, it’s a red flag to me.

If your PM is consistently working 60 hours per week then they are doing it wrong.

Providing the Best Advice to Clients

I base my advice to clients on three things:

- Standards

- Peer-reviewed research

- Non-peer-reviewed research

Too many consultants base their advice on personal experience. Personal experience is, of course, necessary and valuable but not sufficient. As the put-down goes: the plural of anecdote is not data. You must have a scientific reason to support the advice you provide.

Standards

I use standard methodologies when I manage projects. Typically this is the PMBOK framework, or Scrum for Agile projects, or the INCOSE V model for systems engineering, etc. I learned to do this by doing the opposite at the beginning of my career when I was working for one of the Big Four consulting firms. At that firm, we used proprietary methodologies. To a degree, clients hired us because of that – before the internet, best practices were not widely known and you had to hire a consulting firm to get that knowledge. But it also locked our clients into working with us – breaking up or switching vendors would be very difficult. That didn’t seem right to me so when I started Terrapin in 2003, I committed to only using standard, open methodologies. It makes it very easy for me to transition work to my clients.

It is good to base advice on standards because of the vast amount of data that surrounds the standard. The standard itself has been developed and vetted by many experts in the field. And then companies that adopt the standard produce data that is used to improve the standard. For example, PMBOK is on its fifth edition.

Using standards also helps on-board new employees or vendors. If you use PMBOK then you know for a fact that every PMP in the country (600,000+) knows how to manage projects within your organization.

Peer-Reviewed Research

I also use peer-reviewed research to underpin my advice. Most peer-reviewed research is scientifically valid: the methodology and analysis are well thought out and, by definition, it has been reviewed by others in the field. The biggest problem with peer-reviewed research in project management is that there is so little of it. PM is not treated well by most universities. Economics, finance, operations, marketing and other disciplines receive massive attention and funding. I suspect there are just a handful of active researchers in PM.

On this blog, I will be highlighting the most impactful research I have ever read.

Non-Peer-Reviewed Research

Non-peer-reviewed research can be as valuable as peer-reviewed research. With peer-reviewed research, you know someone has vetted it. With non-peer-reviewed research, you have to do the vetting yourself. So I tend to rely on research from established sources that I trust. The biggest source is Gartner – they produce excellent research. I also enjoy the annual CHAOS Report from the Standish Group.

Large consulting firms such as McKinsey, Deloitte and PwC also produce research. Their research tends to be survey-based. They usually survey their clients, Fortune 1000 companies, so the results are skewed towards big-company topics. But the results are still beneficial, especially for understanding the opinions of CIOs in certain areas.

Affordable Care Act Website, US Edition

Following my posts on the Oregon and Maryland ACA website project failures, I am following up with the federal ACA website project. Unlike in Oregon, there has not been a thorough (publicly available) audit of the failure. Some data points have been widely reported: 70% of users could not even log in at launch, and only eight people were able to sign up on day 1. I’ve read that stress testing before launch indicated the site could handle only 1,500 users – even though over 30 million Americans are uninsured. I also understand the team was not co-located (White House in D.C., CMS in Baltimore, contractors in Columbia, MD) and decision-making was difficult.

Steven Brill has written a story at Time (gated). It’s mostly about the team sent in to rescue the project. The first thing the rescue team did was develop a dashboard. Brill quotes one team member saying it was “jaw-dropping” that there was no “dashboard – a quick way to measure what was going on at the website…how many people were using it, what the response times were…and where traffic was getting tied up.” Implementing a dashboard was job 1 for the rescue team.
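Brill doesn’t describe how the rescue team built its dashboard. As a minimal sketch of the three measures the quote names (how many people are using the site, response times, and where traffic gets tied up), assuming a hypothetical request log of (user_id, endpoint, response_ms) records with made-up values:

```python
import math
from collections import defaultdict
from statistics import median

def percentile(values, pct):
    """Nearest-rank percentile (pct in 0-100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def dashboard(requests):
    """Summarize hypothetical (user_id, endpoint, response_ms) log records."""
    by_endpoint = defaultdict(list)
    for _, endpoint, ms in requests:
        by_endpoint[endpoint].append(ms)
    all_ms = [ms for _, _, ms in requests]
    # "Where traffic is tied up": the endpoint with the highest median latency.
    slowest = max(by_endpoint, key=lambda e: median(by_endpoint[e]))
    return {
        "active_users": len({user for user, _, _ in requests}),
        "p95_ms": percentile(all_ms, 95),
        "slowest_endpoint": slowest,
    }

log = [
    ("u1", "/login", 900),
    ("u2", "/login", 1200),
    ("u1", "/plans", 150),
    ("u3", "/plans", 180),
    ("u2", "/apply", 300),
]
print(dashboard(log))
```

A real site would compute these over a sliding time window from its own monitoring data; the point is only that each measure is a simple aggregate once requests are being logged.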

Brill notes “what saved it were …stand-ups.” He further says that “stand-ups…are Silicon Valley-style meetings where everyone…stands-up rather than sits….” Brill implies that California software companies invented stand-ups. While I have no doubt that stand-ups are popular in Silicon Valley, I doubt they were invented there. In the ’90s I worked for an admiral (Craig Steidle) who held daily stand-ups, and stand-ups are a key component of the Scrum methodology. Many, many companies use stand-ups. I guess journalists are unfamiliar with them.

I look forward to a real audit of the project. Brill’s article is entertaining but does not illuminate the problems that plagued the project. The federal ACA website project shares common characteristics with the state-led ACA failures: poor choice of contractors, poor project management, no risk management, lack of a single point-of-authority and poor oversight. Perhaps the GAO could review the effort – we must learn from these large IT project failures, otherwise we are doomed to repeat them.

Affordable Care Act Website, Maryland Edition

I recently documented the failings of the Oregon ACA website. Now it’s Maryland’s turn. Maryland has commissioned an audit, so we should get a good inside look, but the audit is not scheduled to be done until summer. We already know a lot about this failure, though. According to documents from the IV&V contractor obtained by the Washington Post, these problems existed early in the project:

- Insufficient State staffing

- Insufficient time for software development

- Lack of a detailed project plan

- Inefficient communications

- Lack of sufficient time for regression and system testing

- Lack of a comprehensive QA and testing plan

The prime contractor, Noridian, has been terminated. A substantial amount of their fees has been withheld by the state.

There were also significant issues between Noridian and one of its subcontractors, EngagePoint. Interestingly, I read that Noridian hired EngagePoint because Noridian had no real ability to develop the exchange. I don’t know why Maryland awarded a contract to a company that had to quickly hire a subcontractor to do the work.

Most recently, Maryland decided to scrap the $125M effort and adopt the same technology used in Connecticut. Adopting the CT exchange technology is expected to cost an additional $50M. It makes me wonder why some states didn’t just join together in the first place, or stage a phased implementation so mistakes could be corrected before a nationwide launch.

Affordable Care Act Website, Oregon Edition

There is a fantastic audit of Oregon’s attempt to implement the Affordable Care Act (ACA) (“Obamacare”). Highlights include:

- Oregon combined ACA implementation with a complex project that determines if people eligible for healthcare subsidies are also eligible for other government programs. To state the obvious: you NEVER combine a high risk project with another high risk project.

- Oregon chose Oracle as the software provider and paid it on a time-and-materials basis. Contractors are usually paid on a deliverable or milestone basis so that the risk is shared.

- Oregon paid Oracle through a contract vehicle called the Dell Price Agreement. The name alone indicates this may not have been the proper way to contract with Oracle. In total, Oregon signed 43 purchase orders with Oracle worth $132M.

- Oregon did not hire a systems integrator, a common practice in these types of projects.

- Oregon hired an independent QA contractor, Maximus. Maximus raised numerous concerns throughout the project. They never rated the project anything but High Risk.

- Lack of scope control, a delay in requirements definition, and unrealistic delivery expectations were cited as main issues.

- As late as two weeks before the October 1 launch, the Government PM was positive about the project: “Bottom Line: We are on track to launch.”

Who Keeps Moving the Goal Post?

One interesting point in the audit is the difficulty of accurately estimating how much effort software development takes. As the work progressed, the team developed estimate-to-completion figures. As shown in the chart below, the more work they did, the higher the estimate-to-completion got. As they got closer to the end, the end got farther away. D’oh! In general, this shouldn’t happen: the more “actuals” you have, the better your estimates should be.
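The audit doesn’t say how the estimate-to-completion figures were produced. One standard technique, from earned value management, derives the estimate to complete (ETC) from actual performance: ETC = (BAC - EV) / CPI, where BAC is the budget at completion, EV is earned value, AC is actual cost and CPI = EV / AC. The sketch below uses made-up snapshots; with steady efficiency the estimate at completion (EAC = AC + ETC) holds still, and it climbs only when efficiency drops or the scope (BAC) grows.

```python
def forecast(bac, ev, ac):
    """Earned-value forecast from cumulative actuals.

    bac: budget at completion (planned total effort)
    ev:  earned value, the planned effort of the work actually completed
    ac:  actual effort spent so far
    Returns (etc, eac): estimate to complete and estimate at completion.
    """
    cpi = ev / ac            # planned effort earned per unit actually spent
    etc = (bac - ev) / cpi   # remaining planned work, scaled by observed efficiency
    return etc, ac + etc

# Hypothetical monthly status snapshots (effort in person-months).
snapshots = [
    (100, 20, 25),   # 20% done, running 25% over
    (100, 40, 50),   # same efficiency, so the forecast holds steady
    (120, 45, 70),   # scope grew and efficiency fell: the end moves away
]
for bac, ev, ac in snapshots:
    etc, eac = forecast(bac, ev, ac)
    print(f"ETC {etc:.1f}, EAC {eac:.1f}")
```

A forecast that rises with every snapshot, as Oregon’s did, means either performance keeps getting worse or the work itself keeps growing; both are worth investigating rather than explaining away.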

Who Knew This Project Would Fail? These Guys Did.

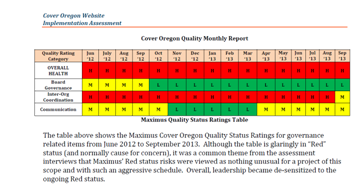

The audit quotes the QA contractor, Maximus, throughout the report. Maximus appears to have had a good grasp of what was happening, pointing out risks throughout the project. A summary of their risk assessments is shown below. The top row, Overall Health, is red (high risk) from Day 1. Unfortunately, the report says the project team became “de-sensitized” by the risk reports since they were always bad. Perhaps Maximus should have started with a green (low risk) status.

If One Oversight Group is Good, More Must Be Better

There were several agencies that had oversight responsibilities. Naturally, this caused confusion and blurred lines of responsibility. A common refrain was the lack of a single point-of-authority. The audit doesn’t make a recommendation, but I will: oversight should be the responsibility of a single group composed of all required stakeholders. I have seen many public sector projects that believe additional levels of oversight are helpful. They are not. Extra oversight layers serve to absolve members of responsibility: they can always say they thought someone else was responsible for whatever went wrong. If there is only one oversight group, its members can’t point fingers anywhere else, and they are more likely to do their job well.

Like Hitting a Bullet with Another Bullet

As noted above, Oregon combined ACA implementation with a complex project that determines if people eligible for healthcare subsidies are also eligible for other government programs.

The Standish Group produces a famous report (the CHAOS Report) that documents project success. The single most significant factor in project success is size. Small projects, defined as needing less than $1M in people cost, are successful 80% of the time while large projects, over $10M, are successful only 10% of the time. (Success is defined as meeting scope, schedule and budget goals.)

So Oregon decided to combine the high-risk Obamacare website project, with a projected success rate of 10%, with another high-risk project with a success rate of 10%. That’s like hitting a bullet with another bullet.
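To put a rough number on the analogy: if we assume the two projects succeed or fail independently and each has the Standish large-project success rate of 10%, the chance that both succeed is the product of the two rates. This is a back-of-the-envelope sketch; the CHAOS figures are industry averages rather than forecasts, and independence is a simplifying assumption.

```python
from math import prod

def joint_success(*success_rates):
    """Chance that every project succeeds, assuming independent outcomes."""
    return prod(success_rates)

# Standish large-project success rate applied to each of Oregon's two projects.
combined = joint_success(0.10, 0.10)
print(f"{combined:.0%}")  # 1%
```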

Everything was On Track, Until It Wasn’t

As late as two weeks before launch, the Government PM reported that the launch was on track. The audit notes the system failed a systems test three days before launch and the launch was delayed the day before launch. (It appears the system test conducted three days before launch was the first system test performed.) Even while announcing the delay, Oregon said the site would launch in two weeks (mid-October). By November, the launch consisted of a fillable PDF form that was manually processed. The site had yet to launch six months later (March 2014).

There is a common story told in project management: “How did the project get to be a year late?” “One day at a time.” By April, six months before the October launch, the project was months behind. As the spring and summer progressed, the project fell further behind. And yet, the PM continued to believe they would “catch up” and finish on time. I don’t know if it’s ignorance or malfeasance at work here. But it is virtually impossible to “catch up.”

One funny (in a sad way) part: the development effort was planned for 17 iterations. So what happened when they completed iteration 17 and still weren’t done? Iterations 17a, 17b and 17c. Ugh. Also, the word iteration implies an Agile methodology, but this isn’t indicated in the audit. It wouldn’t surprise me if Agile was misused (or abused) on this project.

The audit has many lessons learned. I encourage you to read it all, especially if you are undertaking a large system implementation in the public sector.

GAO Guide to Scheduling

The GAO has produced an excellent guide to scheduling. It is extensive (200 pages!) and stems from its audits of thousands of government projects. The appendix even includes audit questions that can be used to gauge adherence to the best practices.

It starts with four characteristics of a good schedule:

- A comprehensive schedule includes all activities for both the government and its contractors necessary to accomplish a project’s objectives as defined in the project’s WBS. The schedule includes the labor, materials, and overhead needed to do the work and depicts when those resources are needed and when they will be available. It realistically reflects how long each activity will take and allows for discrete progress measurement.

- A schedule is well-constructed if all its activities are logically sequenced with the most straightforward logic possible. Unusual or complicated logic techniques are used judiciously and justified in the schedule documentation. The schedule’s critical path represents a true model of the activities that drive the project’s earliest completion date and total float accurately depicts schedule flexibility.

- A schedule that is credible is horizontally traceable—that is, it reflects the order of events necessary to achieve aggregated products or outcomes. It is also vertically traceable: activities in varying levels of the schedule map to one another and key dates presented to management in periodic briefings are in sync with the schedule. Data about risks and opportunities are used to predict a level of confidence in meeting the project’s completion date. The level of necessary schedule contingency and high priority risks and opportunities are identified by conducting a robust schedule risk analysis.

- Finally, a schedule is controlled if it is updated periodically by trained schedulers using actual progress and logic to realistically forecast dates for program activities. It is compared against a designated baseline schedule to measure, monitor, and report the project’s progress. The baseline schedule is accompanied by a baseline document that explains the overall approach to the project, defines ground rules and assumptions, and describes the unique features of the schedule. The baseline schedule and current schedule are subject to a configuration management control process.
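The guide describes the critical path and total float but doesn’t prescribe how to compute them. A minimal sketch of the textbook critical path method (a forward pass for early dates, a backward pass for late dates, total float = late start - early start), assuming finish-to-start links only with no lags or date constraints, and a hypothetical activity network with durations in days (Python 3.9+ for `graphlib`):

```python
from graphlib import TopologicalSorter

def critical_path(activities):
    """CPM over {name: (duration, [predecessors])}.

    Returns ({name: (early_start, early_finish, late_start, late_finish, total_float)},
    project_finish).
    """
    graph = {a: preds for a, (_, preds) in activities.items()}
    order = list(TopologicalSorter(graph).static_order())  # predecessors first
    es, ef = {}, {}
    for a in order:  # forward pass: start when the last predecessor finishes
        duration, preds = activities[a]
        es[a] = max((ef[p] for p in preds), default=0)
        ef[a] = es[a] + duration
    finish = max(ef.values())
    successors = {a: [] for a in activities}
    for a, (_, preds) in activities.items():
        for p in preds:
            successors[p].append(a)
    ls, lf = {}, {}
    for a in reversed(order):  # backward pass: finish before the earliest successor starts
        lf[a] = min((ls[s] for s in successors[a]), default=finish)
        ls[a] = lf[a] - activities[a][0]
    return {a: (es[a], ef[a], ls[a], lf[a], ls[a] - es[a]) for a in activities}, finish

# Hypothetical plan; "launch" is a zero-duration milestone.
plan = {
    "requirements": (10, []),
    "design": (15, ["requirements"]),
    "build": (30, ["design"]),
    "training materials": (10, ["design"]),
    "system test": (10, ["build"]),
    "launch": (0, ["system test", "training materials"]),
}
schedule, finish = critical_path(plan)
critical = [a for a, (*_, total_float) in schedule.items() if total_float == 0]
print(finish, critical)
```

Here everything except "training materials" has zero float, so any slip along requirements, design, build or system test moves the launch date one-for-one, while training materials can slip up to 30 days without affecting it.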

Then the guide has ten best practices for good scheduling:

- Capturing all activities. The schedule should reflect all activities as defined in the project’s work breakdown structure (WBS), which defines in detail the work necessary to accomplish a project’s objectives, including activities both the owner and contractors are to perform.

- Sequencing all activities. The schedule should be planned so that critical project dates can be met. To do this, activities need to be logically sequenced—that is, listed in the order in which they are to be carried out. In particular, activities that must be completed before other activities can begin (predecessor activities), as well as activities that cannot begin until other activities are completed (successor activities), should be identified. Date constraints and lags should be minimized and justified. This helps ensure that the interdependence of activities that collectively lead to the completion of events or milestones can be established and used to guide work and measure progress.

- Assigning resources to all activities. The schedule should reflect the resources (labor, materials, overhead) needed to do the work, whether they will be available when needed, and any funding or time constraints.

- Establishing the duration of all activities. The schedule should realistically reflect how long each activity will take. When the duration of each activity is determined, the same rationale, historical data, and assumptions used for cost estimating should be used. Durations should be reasonably short and meaningful and allow for discrete progress measurement. Schedules that contain planning and summary planning packages as activities will normally reflect longer durations until broken into work packages or specific activities.

- Verifying that the schedule can be traced horizontally and vertically. The detailed schedule should be horizontally traceable, meaning that it should link products and outcomes associated with other sequenced activities. These links are commonly referred to as “hand-offs” and serve to verify that activities are arranged in the right order for achieving aggregated products or outcomes. The integrated master schedule (IMS) should also be vertically traceable (that is, varying levels of activities and supporting subactivities can be traced). Such mapping or alignment of levels enables different groups to work to the same master schedule.

- Confirming that the critical path is valid. The schedule should identify the program critical path—the path of longest duration through the sequence of activities. Establishing a valid critical path is necessary for examining the effects of any activity’s slipping along this path. The program critical path determines the program’s earliest completion date and focuses the team’s energy and management’s attention on the activities that will lead to the project’s success.

- Ensuring reasonable total float. The schedule should identify reasonable float (or slack)—the amount of time by which a predecessor activity can slip before the delay affects the program’s estimated finish date—so that the schedule’s flexibility can be determined. Large total float on an activity or path indicates that the activity or path can be delayed without jeopardizing the finish date. The length of delay that can be accommodated without the finish date’s slipping depends on a variety of factors, including the number of date constraints within the schedule and the amount of uncertainty in the duration estimates, but the activity’s total float provides a reasonable estimate of this value. As a general rule, activities along the critical path have the least float.

- Conducting a schedule risk analysis. A schedule risk analysis uses a good critical path method (CPM) schedule and data about project schedule risks and opportunities as well as statistical simulation to predict the level of confidence in meeting a program’s completion date, determine the time contingency needed for a level of confidence, and identify high-priority risks and opportunities. As a result, the baseline schedule should include a buffer or reserve of extra time.

- Updating the schedule using actual progress and logic. Progress updates and logic provide a realistic forecast of start and completion dates for program activities. Maintaining the integrity of the schedule logic at regular intervals is necessary to reflect the true status of the program. To ensure that the schedule is properly updated, people responsible for the updating should be trained in critical path method scheduling.

- Maintaining a baseline schedule. A baseline schedule is the basis for managing the project scope, the time period for accomplishing it, and the required resources. The baseline schedule is designated the target schedule, subject to a configuration management control process, against which project performance can be measured, monitored, and reported. The schedule should be continually monitored so as to reveal when forecasted completion dates differ from planned dates and whether schedule variances will affect downstream work. A corresponding baseline document explains the overall approach to the project, defines custom fields in the schedule file, details ground rules and assumptions used in developing the schedule, and justifies constraints, lags, long activity durations, and any other unique features of the schedule.
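The guide calls for statistical simulation in a schedule risk analysis but doesn’t prescribe a tool. A minimal Monte Carlo sketch, assuming triangular (low, most likely, high) duration estimates, finish-to-start links only, and a hypothetical four-activity plan in days:

```python
import random

def simulate_finish(activities, rng):
    """One forward pass with sampled durations.

    activities maps name -> (low, most_likely, high, predecessors) and must list
    each activity after all of its predecessors.
    """
    finish = {}
    for name, (low, likely, high, preds) in activities.items():
        start = max((finish[p] for p in preds), default=0.0)
        finish[name] = start + rng.triangular(low, high, likely)
    return max(finish.values())

def schedule_risk(activities, deadline, confidence_pct=80, trials=10_000, seed=1):
    """Return (probability of finishing by deadline, finish at the given confidence)."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    finishes = sorted(simulate_finish(activities, rng) for _ in range(trials))
    p_on_time = sum(f <= deadline for f in finishes) / trials
    rank = max(1, -(-trials * confidence_pct // 100))  # nearest rank, integer ceiling
    return p_on_time, finishes[rank - 1]

# Hypothetical three-point estimates in days.
plan = {
    "design": (10, 15, 25, []),
    "build": (25, 30, 50, ["design"]),
    "training": (8, 10, 15, ["design"]),
    "system test": (8, 10, 20, ["build"]),
}
p_on_time, p80 = schedule_risk(plan, deadline=55)
print(f"P(finish by day 55) = {p_on_time:.0%}; 80% confidence finish = day {p80:.0f}")
```

Adding up the most-likely durations gives day 55, but because each high estimate sits further from the most likely value than the low one does, most simulated runs finish later. The gap between day 55 and the 80% confidence date is the kind of buffer the guide says the baseline schedule should include.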

PMI Salary Survey Analysis

I analyzed the PMI Salary Survey results. The eighth edition was released in 2013. I wanted to analyze the change in PM salaries over time. I have surveys from 2000, 2009, 2011 and 2013. I wish I had surveys from other years but the internet has failed me (PMI, too) and I can’t find them.

At first, I looked at the median salary for all PMs in the U.S. over time.

That makes sense – salaries have gone up. But median salary is too broad a measure. Next, I looked at median salary by years of project management experience. The survey says this is a bigger factor in salary than years of overall experience.

Again, salaries have increased over the last 13 years.

Or have they?

As the chart title says, this is not adjusted for inflation. Even though inflation has been low, averaging less than 2.5% over the last 13 years, it still adds up. Here is the data adjusted for inflation (showing salaries in 2013 dollars).
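The adjustment itself is just a ratio of price levels. A minimal sketch, using the post’s own ceiling of 2.5% average annual inflation rather than actual CPI values (with real data, you would use the ratio of the target-year CPI to the survey-year CPI); the salary figures are made up:

```python
def compound_factor(annual_rate, years):
    """Cumulative price growth from a constant average annual inflation rate."""
    return (1 + annual_rate) ** years

def to_real(nominal, price_index_then, price_index_target):
    """Express a nominal amount in target-year dollars using a price-index ratio."""
    return nominal * price_index_target / price_index_then

# Upper bound from the post: inflation averaging at most 2.5% a year for 13 years.
factor = compound_factor(0.025, 13)
print(f"{factor:.3f}")  # 1.379

# Hypothetical salaries: a median that rose 30% in nominal terms from 2000 to 2013.
salary_2000, salary_2013 = 80_000, 104_000
salary_2000_in_2013_dollars = to_real(salary_2000, 1.0, factor)
print(salary_2013 < salary_2000_in_2013_dollars)  # True: a decline in real terms
```

With prices up by as much as roughly 38% over the period, a salary has to grow that much in nominal terms just to stand still.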

Yikes! It shows that salaries have decreased over time, or, at best, remained flat! How is this possible?

I have two theories. First, perhaps salaries from 2000 are artificially high due to the dot-com craze: PMI just happened to survey PMs when they were receiving outlandish salaries. Second, perhaps the job title of PM has been adopted by many more people, who are dragging the salaries down.

PM Salaries in 2000 Were Boosted by the Dot-Com Craze

While this phenomenon was real – I got a ridiculous salary offer in 2000 – I don’t think it was widespread, and it didn’t last long. PMI surveys PMs across the U.S., and I doubt salaries increased much in Dubuque due to the dot-com craze. So I wouldn’t expect a large spike in salaries nationally.

Plus, salaries tend to be sticky. Once you get a raise, you tend to keep it even if your company does worse in the future. If salaries really did go up significantly, I’d expect to see that impact for…ever. Again, that was true in my case: I got a large salary in 2000, and I continued to make more money with every subsequent job.

Conversely, maybe the salaries of 2009, 2011 and 2013 are artificially low due to economic issues.

Waiter, Actor, Project Manager

There’s a joke in Hollywood that every waiter or waitress is an actor. Perhaps the same thing is happening to PMs. Maybe the definition of PM expanded so much in the 2000s that there are many, many more types of PMs. The 2000 survey may have been weighted towards technical PMs – computer science, IT, aerospace and defense, construction. Technical PMs in these industries make more money than other types of PMs. The later surveys may have included more PMs who managed marketing or investor relations projects (or perhaps these PMs are more accurately called business analysts). And maybe these types of PMs earn less.

The New Normal?

Or maybe, PM salaries have truly gone down. The U.S. Census Bureau shows that median household income went down about 2% between 2000 and 2010. My favorite economics blogger, Tyler Cowen, has written on The Great Stagnation. We PMs are not immune.

NASA’s Lessons Learned for Project Managers

NASA has a great set of lessons learned for project managers. I sometimes call “lessons learned” “lessons not learned,” since many organizations make the same mistakes over and over.

Here are my favorites from the list:

- All problems are solvable in time. Make sure you have enough schedule contingency, or the project manager who takes your place will.

- People who monitor work and don’t help get it done never seem to know what is going on.

- Sometimes the best thing to do is nothing.

- Never assume that someone knows something or has done something. Ask them.

Ignore the Triple Constraint

My colleague at the Stevens Institute of Technology, Thomas Lechler, did research on creating business value through projects. The paper, The Mindset for Creating Project Value, is available for free to PMI members at www.pmi.org. He argues that PMs should shift their mindset from the triple constraint to creating business value.

Here is Dr. Lechler’s hypothesis: projects measure success against the triple constraint of scope, schedule and cost. If a project did what it was supposed to do, on time and on budget, then it was a success. Businesses, however, don’t measure success this way. They are looking for other things such as increasing revenue, decreasing expenses, increasing customer satisfaction, and serving more people (for a charity, perhaps). It’s easy to imagine situations where these different definitions of success conflict. Microsoft’s Kin cell phone may have been a project success, but it tanked in the marketplace. Apple’s iPhone could have failed each of the three project success measures, but nobody cares because it created massive value for the organization.

Dr. Lechler compares PMs with a triple constraint mindset to PMs with a project value mindset. PMs with a triple constraint mindset will naturally force a project to its baseline. They want to achieve project success. PMs with a project value mindset are more likely to identify and pursue project changes that may negatively impact the project but ultimately benefit the organization. The data are interesting, and I encourage you to read the paper.

I must stress that it is critical that businesses select projects wisely and that PMs know the strategic business goals for the project. Usually, the PM is in the best position to know if a project is going to achieve business value.

Despite the headline, a PM should not ignore the triple constraint. But PMs must remember that projects are undertaken to achieve a goal, and the goal may be more important than the triple constraint.
