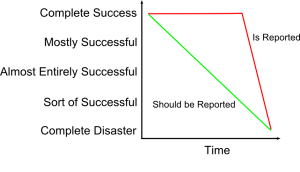

A few recent high-profile project failures highlight the danger in status reporting. Many projects are reported as low risk and on schedule right up until they fail catastrophically. The reports show no progression from good to bad, just a sudden failure. In reality, most projects fail incrementally. Past performance IS a predictor of future performance for projects: projects that fall behind early rarely recover and finish on time.

The first example is the federal government's project to develop the Healthcare.gov website to meet the requirements of the Affordable Care Act (aka "Obamacare"). Major government IT projects are tracked on www.itdashboard.gov. The dashboard from August 2013 (three months before launch) shows the status as nearly all green (good). The overall project rating is green, a 5 out of 5 (with 5 being the best). The dashboard includes six cost and schedule variance assessments: five are green and one is yellow. It also includes operational performance and other "investment" metrics, most of which are marked "met."

Now, I am sure this is not the main status reporting mechanism used by the Healthcare.gov project team, but this one is certainly misleading, and it is on a public website for everyone to see. (The dashboard still exists today, nearly six months after the failed launch.)

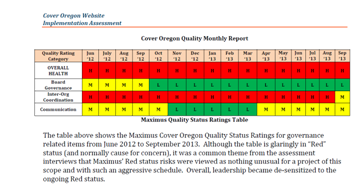

The second example is California's recent payroll implementation project. The status report issued before its pilot program launch covers 15 areas: 14 are assessed as green and one as yellow. Just three months later the pilot launch went poorly, and 11 months later the entire project was suspended.

In both cases, what went wrong, and how did it go wrong so quickly? The fact is that neither project failed suddenly; both were high risk when these status reports were published. But the reports painted a rosy picture that may have misled leaders about the projects' true status.