Nothing to See Here, Move Along

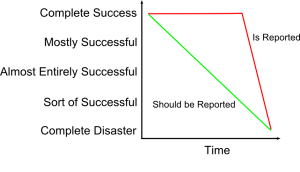

There have been a few high-profile project failures recently that highlight the danger in status reporting. It seems many projects are reported as low risk and on schedule right up until they fail catastrophically. There appears to be no progression from good to bad, just a very sudden failure. In fact, many (most) projects fail incrementally. Past performance IS a predictor of future performance for projects. Projects that fall behind early rarely recover and finish on time.

The first example is from the federal government’s project to develop the Healthcare.gov website to satisfy the requirements in the Affordable Care Act (aka “Obamacare”). Major government IT projects are tracked on www.itdashboard.gov. The dashboard from August 2013 (three months before launch) shows the status as nearly all green (good). The overall project rating is green and it is rated a 5 (out of 5, with 5 being the best). The dashboard includes six cost and schedule variance assessments – five are green and one is yellow. It also includes operational performance and other “investment” metrics – most are “met.”

Now, I am sure this is not the main status reporting mechanism used by the Healthcare.gov project team but this one sure is misleading – and it’s on a public website for everyone to see (this dashboard exists even today – nearly six months after the failed launch).

The second example is California’s recent payroll implementation project. The status report before its pilot program launch shows 15 areas – 14 are assessed as green and one is yellow. The pilot program launch went poorly just three months later and the entire project was suspended 11 months later.

In both of these cases, what went wrong and how did it go wrong so quickly? The fact is that neither of these projects failed suddenly – both were high risk when these status reports were published. But the status reports painted a rosy picture that may have led leaders astray as to the true status.

Evaluating IT Performance

Following is a process to evaluate an IT department. Similar to project management, I find that many organizations fail at some of the basics.

- Hardware
  - Inventory all hardware, particularly servers and their purpose (many companies don’t know what they own)

- Software
  - Inventory all software and its purpose (look for “shadow apps” – apps that people have installed locally – these pose a greater security threat)

- Network
  - Document network architecture (vulnerabilities are easier to spot if the architecture is documented)

- Information Security
  - Review status of routers, firewalls, firmware updates, anti-virus software and other equipment designed for security (companies struggle to keep up with the latest versions of security devices and software)
  - Hire a company to evaluate (hack) you to determine vulnerabilities

- Projects
  - Document the purpose and status of every project (status of projects is likely to be worse than commonly believed)
  - Align projects to strategy (some won’t align to your strategy and should be terminated)
  - Evaluate business value for each project

- Help Desk
  - Review help desk statistics (software is better and people are smarter – help desk requests should be trending downwards)

- Disaster Recovery
  - Review the results of the latest DR test (many companies fall behind on DR testing and they fail to make changes based on the results)
  - Review the restore process (the business is frequently unaware of how long it will take to restore certain operations in a disaster)

- Organization
  - Review the org structure and the people in leadership roles

- Processes
  - Evaluate common processes for effectiveness (PM processes, security, backups, help desk, etc.)

- Process Improvement
  - Review the regular status reports that are used to measure and manage IT (ensure IT is measuring things that are important – not just things that are easy to measure)

Failure to Act: SF-Oakland Bay Bridge Project

The Sacramento Bee has a(nother) article on the San Francisco – Oakland Bay Bridge project. It details how a Chinese construction company did not weld steel girders to the requirements, potentially jeopardizing the structural integrity of the bridge. Some of the eye-opening facts:

- ZPMC, the Chinese construction company hired to build the bridge girders, had never built bridge girders before! They were awarded the contract because their bid was $250M below the next closest bid. Naturally the final cost was well over $250M higher.

- Caltrans, the government agency in charge, allowed ZPMC to ignore both welding codes and contractual requirements

- ZPMC was not penalized for failure; in fact, they were paid extra to work faster

- Several government workers were re-assigned after pointing out quality issues

- One lead executive for the government quit and joined a company involved in the project

The article implies government waste, as government people lived quite well when traveling to China to check on manufacturing. It also implies criminal wrong-doing when it states that law enforcement officials are looking into things.

This project reminds me of the Maryland health exchange project in that an unqualified company won the contract (see here). I don’t know how government procurement officials could get this so wrong.

It also highlights one of the pitfalls in project status reporting. As noted here, even when risks are identified, leaders frequently fail to act.

Cost of the Health Exchange Websites

Vox published a report from Jay Angoff, a former Obama administration official who is now at a law firm. The Angoff report examines the federal cost of the health exchange websites and then calculates the cost per enrollee in each state. The headline is deliberately enticing: “Hawaii’s Obamacare cost nearly $24,000 per enrollee.”

There are two problems with Angoff’s analysis, one major and one minor but still significant:

- The report takes the $2.7B cost of the federal website and divides it equally between the 36 states that used the federal website.

- The report does not include state spending or spending by non-government groups.

Major Flaw: Federal Spending is Allocated by State

The Vox article does not mention the math where the $2.7B federal price tag is divided equally by 36 states ($75M per state). This is a significant omission. This assumption is explained in the report:

Administrative costs are allocated 1/36 to each of the 36 states, regardless of population, because the technology necessary to operate the Exchange is substantially similar in each state regardless of its population. To the extent that non-technology costs are higher in larger states, this allocation overstates cost-per-enrollee in smaller states and understates it in larger states.

No, no, no! This is a poor assumption! I would not allocate the cost equally to each state. That would be like taking an F-22 fighter jet and allocating its cost by state. After all, the technology to operate it is the same in every state!

I took the analysis and revised it by two methods. First, it would be better to allocate cost by enrollee. This makes sense as the enrollees should “pay” for the site. In the second run-through, I allocated cost by population, which is a sensible way to allocate costs on something that is theoretically available to all. It may be best to allocate costs by the number of taxpayers per state but that gets a bit complicated.

The spreadsheet here shows the states that were included in the federal website, sorted by the cost per enrollee as in the Angoff report, then followed by two columns: 1) cost per enrollee if the costs were allocated by enrollee and not state and 2) cost per enrollee if the costs were allocated by population.

As you can see, there is a massive difference in the numbers. Because of Angoff’s methodology of dividing costs by state, the “most expensive” enrollees are all from states with small populations.
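To make the difference between the three allocation rules concrete, here is a minimal Python sketch. The two states, their enrollment and population figures, and the $150M shared cost are all made up for illustration (they are not taken from the Angoff report or from my spreadsheet), and the sketch only covers a shared cost, not any state-specific spending.

```python
def cost_per_enrollee(states, total_cost):
    """Cost per enrollee for each state under three ways of splitting a shared cost."""
    total_enrollees = sum(s["enrollees"] for s in states.values())
    total_population = sum(s["population"] for s in states.values())
    results = {}
    for name, s in states.items():
        # Rule 1: every state gets an equal slice, regardless of size.
        share_equal = total_cost / len(states)
        # Rule 2: each state's slice is proportional to its enrollees.
        share_enrollee = total_cost * s["enrollees"] / total_enrollees
        # Rule 3: each state's slice is proportional to its population.
        share_population = total_cost * s["population"] / total_population
        results[name] = {
            "equal_by_state": share_equal / s["enrollees"],
            "by_enrollee": share_enrollee / s["enrollees"],
            "by_population": share_population / s["enrollees"],
        }
    return results


# Hypothetical inputs, for illustration only.
states = {
    "Small State": {"enrollees": 10_000, "population": 1_000_000},
    "Large State": {"enrollees": 490_000, "population": 19_000_000},
}
shared_cost = 150_000_000  # hypothetical shared cost in dollars

results = cost_per_enrollee(states, shared_cost)
for name, row in results.items():
    print(name, {method: round(value, 2) for method, value in row.items()})
```

With these made-up inputs, the equal split charges the small state $7,500 per enrollee versus about $153 for the large state, while allocating by enrollee gives $300 in both. The gap in the first column comes entirely from the allocation rule, not from anything the small state spent.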

Below is my entire analysis. As a side note, I’m ~~depressed~~ ~~impressed~~ surprised about the amount of federal money that some states (Hawaii, New Mexico) were able to obtain.

Minor Flaw: Lack of State Spending in the Analysis

The report also suffers from a lack of state spending data and of spending data from non-government groups. While it may be difficult, or impossible, to get spending from non-government groups, state spending is easier to obtain. As an example, Maryland received $171 million in federal dollars but added $20M of its own money. The report therefore understates Maryland’s cost by roughly 10% ($20M of $191M) – and Maryland’s costs are borne solely by Maryland’s taxpayers (not equally by county, if the report chose to make the same assumption as above).

Overall, the Angoff report is fatally flawed by making a poor decision to allocate federal costs by state and not including state spending. To its credit, Vox noted the missing data but it did not mention the assumption that grossly distorts the spending data for the health exchanges.

Teaching Project Management at Georgetown

I’m excited to join Georgetown University as an adjunct professor in the Systems Engineering Management master’s degree program. The program combines systems engineering courses from my former university, the Stevens Institute of Technology, and management and leadership courses from Georgetown.

The program is designed for people looking to manage or lead technical and engineering efforts, particularly in software, aerospace and defense, transportation, and healthcare. Top local employers of systems engineers include General Dynamics, SAIC, ManTech, CSC, Boeing and Booz Allen.

I’ll be teaching project management on Monday evenings in the Fall semester.

Biggest Failures in the ACA Health Exchange Website Projects

I’ve written about the failed U.S., Maryland, Oregon and Vermont ACA health exchange website projects. I’ve collected the biggest failures from each of the projects. Most of these findings come from the QA auditors hired for each project. It’s a litany of woe and there are similar issues on each project.

Vermont

- Project controls not consistently applied.

- Change Management: Appears to be very little control over changes in schedule, deliverables or scope. Impact to other project areas are not analyzed or alternatives presented.

- Current weekly status reports have little detail on dependencies, risks or issues.

- Schedule Delays: deliverables shifted to the future without going thru change management process; potential impacts not known or agreed to.

- Plan continues to be reworked and updated, there is no way to report on or assess progress. It is not clear what components will be delivered when. The dates seem to be unrealistic and unachievable. Some dates violate CMS schedule requirements.

- Development (and, later) test, (and, later) prod, (and, later) pre-prod, training and DR environments are not ready as planned.

- Requirements: lack of granularity in scope, definition and traceability; insufficient detail; ambiguity; requirements not tied to work flows or use cases.

- Vendor staffing not as scheduled (20 unfilled positions) with no staffing plan.

- It is likely that functionality will not be tested until it is too late.

Oregon

- Lack of universally accepted PM processes

- Ineffective communication and lack of transparency

- Poorly defined roles and responsibilities

- Lack of discipline in the change management process

Maryland

- Lack of a schedule

- No single person as the overall PM

- Unclear oversight

- No documented list of features at go-live

- Code moved directly into production

- Lack of integration and end-to-end testing

- Insufficient contingency planning

ACA Website Project, Vermont Edition

I’ve written about the failed U.S., Maryland and Oregon ACA health exchange website projects. Here is Vermont.

Vermont’s contractor was CGI, who was also responsible for the U.S. project. The QA contractor was Gartner Consulting. Nearly all of the released findings stem from Gartner’s QA reports.

Similar to Oregon’s health exchange project, at no point in time did the QA contractor assess risk as anything but RED – high risk. For Vermont, it was 12 straight high risk bi-weekly status reports, the last one being after the October 1 go-live when the probability of most risks occurring hit 100%. The most critical risks throughout the project were:

- Planning. Gartner notes a lack of planning around integration (probably the hardest part of the project) and testing (the most important part of the project).

- Project Management. A laundry list of project management issues: lack of project controls; apparently very little control over changes in schedule, deliverables or scope; impacts to other project areas not analyzed and alternatives not presented; and a plan that continues to be reworked and updated, so there is no way to report on or assess progress. It is not clear what components will be delivered when. The dates seem to be unrealistic and unachievable. Some dates violate CMS schedule requirements.

- Scheduling. Numerous schedule issues (“missed delivery dates and milestones” is noted in 8 of 14 released QA reports) including one stunning problem probably caused by technical issues or poor management: Development (and, later) test, (and, later) prod, (and, later) pre-prod, training and DR environments are not ready as planned. Essentially no environment was ready as planned – NONE. Also: “Plan continues to be reworked and updated, there is no way to report on or assess progress. It is not clear what components will be delivered when. The dates seem to be unrealistic and unachievable. Some dates violate CMS schedule requirements.”

- Staffing. Within five months of go-live, Vermont had four unfilled positions, including Test and Training Leads, and CGI had 20 (!) unfilled positions. Worse, there was no staffing plan to address this gap.

In some cases, the QA reports include Vermont’s risk management strategy. One strategy is especially damning.

- Risk Rating: HIGH

- Risk: It is likely that functionality will not be tested until it is too late.

- Vermont’s Risk Response: Accept

My response: D’oh! This shows that Vermont was fully prepared to implement features that it had not tested. Unacceptable.

This audit is not as comprehensive as Oregon’s audit as it is almost entirely based on Gartner’s QA reports. And some of them have redactions that, of course, make you wonder what is hidden – could it be worse? Nonetheless, there are more lessons to be learned here for anyone undertaking a large IT project.

Metrics to Improve Project Performance

I love Kaplan and Norton’s balanced scorecard concept and I have designed and implemented it several times for my clients. It calls for balancing traditional financial metrics such as cash flow and ROI with three additional dimensions: internal business processes (which I call operations), customers, and learning and growth (which I call HR).

If your operations are projects (aerospace and defense, consulting and construction companies among others), then your metrics in internal business processes should be project-based metrics. I’ve developed a list of metrics that companies should use to monitor and improve project performance.

[table id=4 /]

The Pitfalls of Project Status Reporting

There is an excellent research article in MIT Sloan Management Review on the Pitfalls of Project Status Reporting. The article starts by recounting the launch of the federal health exchange healthcare.gov, where many of the stakeholders thought the project was on track right before go-live. (I recently wrote about the Oregon health exchange where the project manager said two weeks before launch: “Bottom line: We are on track to launch.” The Oregon site never launched and after six months, Oregon abandoned the effort and is joining the federal site.)

The point of the article is that leaders can be surprised when IT projects run into trouble. The authors go through five “inconvenient truths.”

- Executives can’t rely on project staff and other employees to accurately report project status information and to speak up when they see problems. Their research shows that PMs write biased status reports 60% of the time and the bias is twice as likely to be positive as negative. For obvious reasons, PMs feel that personal success is related to project success so they are incentivized to paint a brighter picture. This tendency to hide bad news increases in organizational cultures that are not receptive to bad news and where the PM may feel personally responsible for the bad news (as opposed to, say, a contractor being responsible).

- A variety of reasons can cause people to misreport project status; individual personality traits, work climate and cultural norms can all play a role. Within traits, the authors cite risk-takers and optimists as more likely to misreport status. A work climate that is based on professional rules of conduct is less likely to produce misreporting. And collective cultural norms, such as those found in the Far East (as opposed to individualistic cultures such as the U.S.), are more likely to produce misreporting.

- An aggressive audit team can’t counter the effects of project status misreporting and withholding of information by project staff. Basically, it is possible for PMs and project teams to hide information from auditors, which is no surprise. Project teams do this when there is distrust of the auditors. This lack of trust also impacts internal reporting structures. If a PM does not trust the senior executive they report to, they are more likely to misreport.

- Putting a senior executive in charge of a project may increase misreporting. The greater the power distance between the person giving the status and the person receiving the status, the greater likelihood of misreporting.

- Executives often ignore bad news if they receive it. The authors found that in many cases, issues were identified and elevated to executives who had the power to resolve them but the executives did not act, partly because the pressure on executives for the project to be successful can be the same as, or greater than, the pressure on PMs. This is what happened in the Maryland health exchange project. The QA auditor wrote to the Executive Director two weeks before launch warning her of eight serious risks of going live. The Executive Director downplayed the risks and the project continued towards its disastrous launch.

The article includes recommendations for each inconvenient truth and three useful attachments: 1) a survey to determine your risk of misreporting, 2) the cycle of mistrust that occurs between teams and auditors and 3) a list of common misreporting tactics (including selective highlighting and redefining deliverables).

Project (and portfolio) status reporting is a must-have for project-based organizations. This article provides valuable insight on how to make it effective.

An excellent article, the best in PM this year!

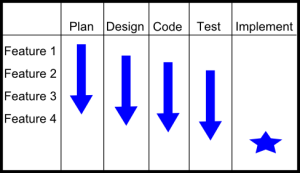

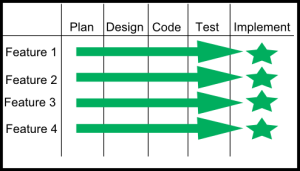

One Way to Understand Agile Methodologies

I describe the difference between the traditional waterfall methodology and an Agile methodology as the difference between going vertical and going horizontal. You can see this in the difference between the two pictures below.

The first picture represents the typical waterfall project schedule. You essentially do all the planning, then all the design, then all the coding, then all testing and then you implement. This is not exactly right – there is usually overlap between the phases (hence waterfall) but this is more or less accurate. I call this “going vertical.”

This next picture is how the same project would be done using an Agile methodology. You would take Feature 1 and plan, design, code, test, and, maybe, implement before moving to Feature 2. I call this “going horizontal.”

It’s easy to see the advantages of each technique. Agile (going horizontal) enables you to go through all phases early in a project. You discover coding or testing issues on the first go-around so you can improve each iteration. But the downside with Agile is you may discover something while designing Feature 4 that forces you to re-write early functionality. So you increase the probability of re-work but you lower the risk of discovering issues late in the schedule.

In the traditional waterfall methodology (“going vertical”), you rarely have to re-design something because you have taken everything into account during the (one) design phase. Of course the issue with waterfall is you don’t discover coding or testing problems until late in the project schedule – maybe too late.
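One way to see the vertical vs. horizontal difference is as the order of two nested loops. The short Python sketch below is only an illustration: the phase and feature names are placeholders, each (phase, feature) pair is treated as one equal-sized step, and the overlap between waterfall phases is ignored.

```python
PHASES = ["plan", "design", "code", "test", "implement"]
FEATURES = ["Feature 1", "Feature 2", "Feature 3"]  # placeholder names


def waterfall_order(features, phases):
    """Going vertical: finish a phase for every feature before starting the next phase."""
    return [(phase, feature) for phase in phases for feature in features]


def agile_order(features, phases):
    """Going horizontal: take one feature through every phase before starting the next."""
    return [(phase, feature) for feature in features for phase in phases]


def first_test_step(order):
    """1-based position of the first testing step in a work order."""
    return next(i for i, (phase, _) in enumerate(order, start=1) if phase == "test")


waterfall = waterfall_order(FEATURES, PHASES)
agile = agile_order(FEATURES, PHASES)

print("Waterfall first tests at step", first_test_step(waterfall), "of", len(waterfall))
print("Agile first tests at step", first_test_step(agile), "of", len(agile))
```

Under these simplifying assumptions, both orders contain the same 15 steps, but the first testing step is step 10 in the waterfall order and step 4 in the Agile order. That is the "discover problems early" advantage in miniature. The sketch does not model the re-work risk on the Agile side.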

When appropriate, I use Agile since it lowers the risk of a project. However, Agile is not appropriate for many types of projects or in some companies and the majority of projects are still managed using a waterfall methodology.